Capturing Creativity: Evaluating Social-Emotional Learning in Arts-Based Education

Anyone who has evaluated an arts-based intervention knows how hard it can be to measure its impact. Selecting the right assessment tools, capturing deeply personal and varied student experiences, and making sure social-emotional growth is tracked meaningfully all require careful thought. Academic progress is usually assessed with clear, standardized methods. Evaluating social-emotional learning (SEL) through an arts-based intervention is different: it demands a more flexible approach to both survey design and data collection.

Populi evaluated the Art Labs program that Saturday Art Class (SArC) runs at a school in Mumbai. The program uses structured, arts-integrated experiences to show how visual arts can build SEL skills, focusing on communication, collaboration, critical thinking, perseverance, joy, and imagination in students from 1st to 8th grade. Our data collection used an observation-based assessment tool to evaluate behavioural changes in students as they engaged with visual arts. Capturing subtle changes, such as how students express themselves, interact with peers, or persist through creative tasks, required a careful balance between structure and flexibility in our evaluation approach.

This challenge raises an important question: How do we measure what truly matters in arts-based SEL interventions?

In this blog post, we share strategies we implemented to assess SEL skill development for an arts-based intervention. From designing observation-based tools to capturing subtle behavioural shifts, we navigated the challenges of measuring social-emotional growth in a creative setting. We hope our experiences offer useful insights for educators, researchers, and practitioners working to evaluate SEL in innovative and non-traditional ways.

Key Challenges:

- Standardization of tools: Assessing SEL skills through arts-based interventions requires a balance between structured evaluation and the flexibility to capture individual expression. Standardization is essential for consistency across evaluators, settings, and student groups. However, because SEL and creative engagement are inherently subjective, defining clear, objective criteria for each skill was difficult, yet it had to be done before the evaluation could begin.

- Importance of piloting tools: Given the complexity and novelty of an observation-based SEL assessment, piloting the tool was crucial. Without field testing, we could not have anticipated which questions and prompts would resonate with students, or which observation criteria would need refining.

- Collaboration between evaluators and implementation partners: A traditional evaluator-only approach, where an external party observes and records data, was not practical in this setting. Unlike a typical survey or structured assessment, these art classes had to be actively facilitated while the evaluator simultaneously observed, documented, and assessed student engagement in real time.

- Creating an assessment environment which mimics an art class: An assessment, irrespective of subject, can be daunting for students. Because the assessment took twice as long as a regular art class, we anticipated that some students might struggle with its length and drop out, leading to attrition.


Our Approach

Here is how we addressed each of these data collection challenges in order to measure the SEL outcomes that matter:

Standardization of tools:

To ensure a structured yet flexible assessment, we designed scales for each question that accounted for the range of creativity and SEL skills students might demonstrate. These scales helped us capture different behaviours and approaches students took during an activity, rather than forcing their responses into rigid categories. By setting clear yet adaptable benchmarks, we could observe variations in engagement, problem-solving, and artistic expression, making the evaluation both consistent and reflective of the diverse ways students interact with art and SEL concepts.

Importance of piloting tools:

During the pilot phase, we quickly realized that some questions were too abstract for students, making it difficult for them to engage meaningfully. Similarly, some observation criteria were too broad, which might have led to inconsistencies in how evaluators interpreted student behaviours.

To address these gaps, our research associates and team lead closely observed the pilot sessions, making detailed notes on how students responded to different prompts and how well the observation criteria captured SEL behaviours. These field insights became the foundation for our brainstorming sessions, where we discussed what worked, what didn’t, and how to refine both the questions and the scales. Through this iterative process, we were able to redesign questions, narrow down observation parameters, and create a tool that was both practical for evaluators and understandable and fun for students.

Collaboration between evaluators and implementation partners:

Since facilitators were an active part of the evaluation, they had to familiarise themselves with the evaluation process. Unlike their usual sessions, where creative exploration was open-ended, the prompts and activities in the assessment were designed to elicit specific SEL behaviours. This required facilitators to strike a balance between guiding students through the activities and allowing natural interactions to unfold, ensuring that the behaviours being assessed were genuine and not influenced by external direction.

Additionally, facilitators had to be mindful of how they framed instructions and engaged with students, as their approach could impact how students responded to the activities. The evaluation also required real-time documentation of observations, field notes, and student interactions, which added another layer of complexity to the process.

Creating an assessment environment which mimics an art class:

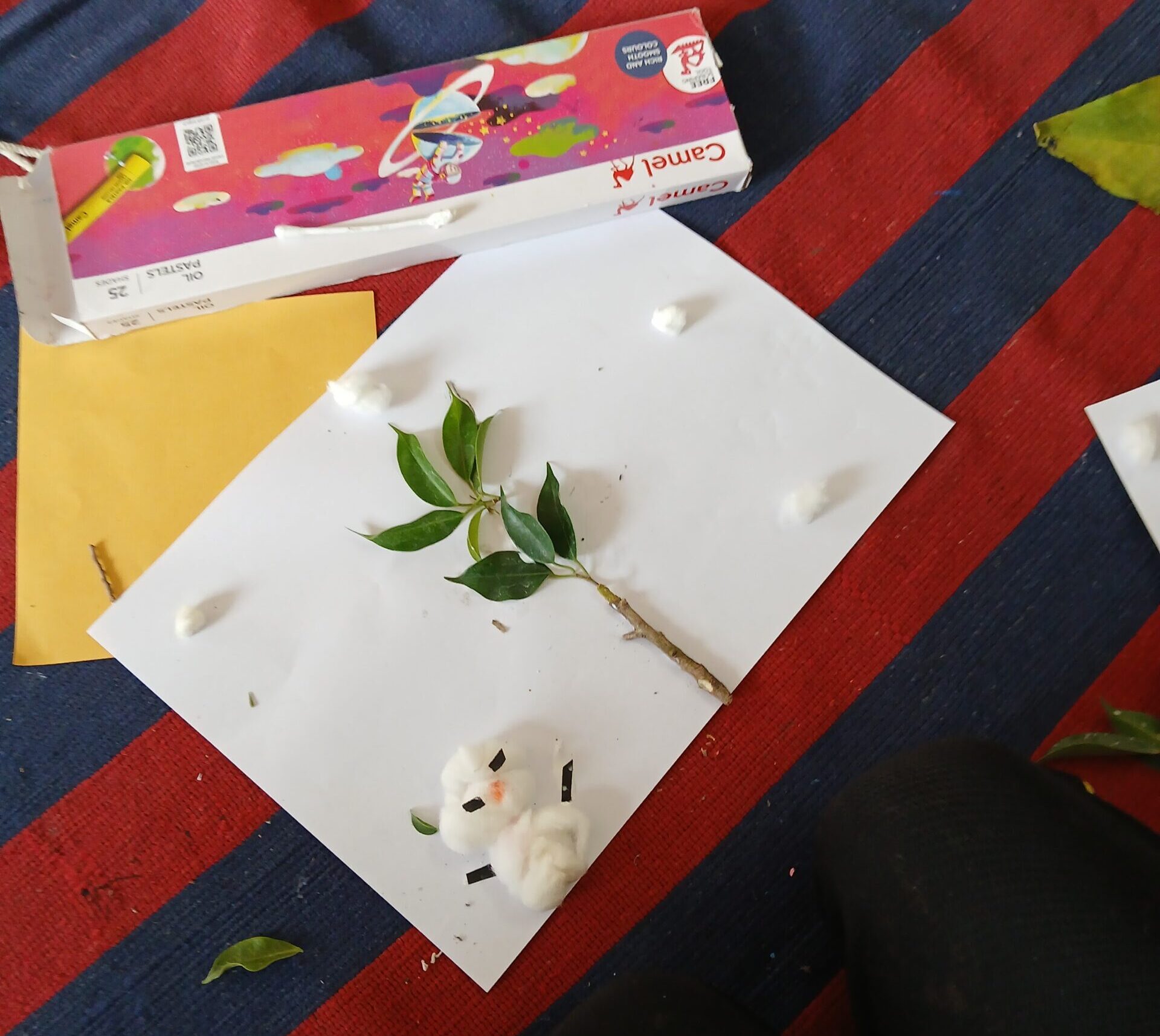

Recreating the feel of a regular art class was our biggest challenge. To achieve this, we took several steps to keep the setting engaging and familiar for students:

- Blending Sampled and Non-Sampled Students: To mimic a real classroom dynamic, we mixed sampled students with non-sampled peers. The non-sampled students helped maintain the feel of a regular class, while the evaluator could focus on observing only the sampled students rather than an entire group of 10–12 students at once. This reduced the evaluator's cognitive load over an observation lasting more than two hours, allowing for more detailed and accurate assessments.

- Providing an Open Material Station: Some activities required more materials than students were accustomed to using. To foster exploration, we set up a material station where students could freely access and experiment with different supplies.

- Encouraging Interaction: To maintain a relaxed atmosphere, students were free to participate in discussions, talk and walk around between activities, and choose where they wanted to sit.

- Incorporating Breaks: Since the assessment was longer than a typical class, students were given the flexibility to take breaks whenever needed, preventing fatigue and disengagement.

By prioritizing student comfort and autonomy, we ensured that the assessment felt less like a formal evaluation and more like an extension of their creative learning experience.

Overcoming these challenges took the combined effort of Populi's team and SArC's constant help and support. The experience highlighted the importance of designing assessment approaches that are context-sensitive, student-friendly, and reflective of the diverse ways children express social-emotional skills through art.

Author:

Suparna Aggarwal

Senior Consultant, Populi Consulting
